A (Very) Brief History of AI

Why it feels like AI came out of nowhere and where it’s headed

The Early Days of AI

Artificial Intelligence (AI) may seem like a brand-new thing, but it actually started way back in the 1950s, when a handful of scientists began toying with the idea of creating machines that could “think”. The term “artificial intelligence” itself dates to the 1956 summer workshop at Dartmouth College (New Hampshire, United States), having been coined by John McCarthy in the proposal for that event. These early AI efforts were a mix of enthusiasm and limitations: researchers built programs that could solve maths problems or play basic games, but the technology simply wasn’t powerful enough to do anything close to real “thinking”.

AI got its first big commercial surge in the 1980s with “expert systems” designed to make decisions in fields like medicine or finance. The goal was to emulate human decision-making. But here’s the thing: these systems were clunky and inflexible, stuck in strict sets of hand-written rules that couldn’t adapt to new situations. When the high hopes led to limited results, AI hit what’s now known as an “AI winter”: a sort of industry hibernation where interest and funding dried up. (A similar slump had already followed the first wave of optimism in the 1970s.)

Why AI “Suddenly” Exploded

So, why does it feel like AI suddenly showed up out of nowhere, tackling everything from chatbots to music composition, all while sounding remarkably human? If you weren’t following the research and tech developments, it really did seem like AI appeared “overnight”. But the reality is that this “overnight” success was decades in the making:

Data Boom: Thanks to the internet, we suddenly had a massive amount of data. AI feeds on data, and by the early 2000s, it had more than enough to chew on.

Processing Power: GPUs (Graphics Processing Units) got cheaper and way more powerful, enabling AI to process enormous datasets and train complex models far quicker than before.

Deep Learning: Advances in neural networks, especially deep learning, allowed AI to break out of rigid rules and start recognising complex patterns by itself. These models, inspired loosely by the human brain, tend to improve as they are trained on more (and better) data. That’s how we got big leaps in things like image recognition, language processing, and more.

These developments, together with a serious cash injection from tech giants and hopeful investors, fuelled an era of AI tools and applications that seemed, at least to us “normies”, to pop up out of nowhere.
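To make the shift from hand-written rules to learned patterns a little more concrete, here is a minimal sketch in Python. It is not deep learning: it trains a single perceptron, about the simplest learning model there is, on a handful of made-up examples. The spam scenario, the feature names, and the data are all assumptions invented for illustration.

```python
# Toy contrast: a hand-written rule vs. a rule learned from examples.
# The scenario, features, and data are hypothetical.

def rule_based_is_spam(exclamations, links):
    """Expert-system style: a human hard-codes the thresholds."""
    return exclamations > 3 and links > 1


def train_perceptron(examples, epochs=10, lr=1.0):
    """Learn two weights and a bias from labelled examples."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), label in examples:
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            err = label - pred  # 0 when the guess was right
            w[0] += lr * err * x1
            w[1] += lr * err * x2
            b += lr * err
    return w, b


def predict(w, b, x):
    """Apply the learned weights to a new (exclamations, links) pair."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


# (exclamation marks, links) -> 1 for spam, 0 for not spam
examples = [
    ((5, 3), 1), ((6, 2), 1), ((4, 4), 1),
    ((0, 0), 0), ((1, 0), 0), ((0, 1), 0), ((1, 1), 0),
]

w, b = train_perceptron(examples)
```

Nobody typed a threshold into `train_perceptron`; the weights were nudged until the examples came out right. Modern deep learning stacks millions or billions of such adjustable weights in layers, but the idea of learning from examples rather than from hand-written rules is the same.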

Why Are There Suddenly So Many Language Models?

Nowadays, it feels like every tech company has its own AI model, each claiming to be more advanced than the last. This explosion is mainly down to open research and the massive potential to make money with these models:

Open-source Foundations: Models like GPT (developed by OpenAI) set the stage. Once GPT-2 was openly released in 2019, developers worldwide saw the potential and started building their own versions, improving on the core model and adding unique twists.

Corporate Competition: Big players like Google and Meta (Facebook) recognised the business potential in these models for everything from customer service to data analytics. That led to rapid development as everyone tried to get in on the action.

Lowered Costs: Cloud computing and data became more accessible, so even smaller companies could afford to train or fine-tune their own models, contributing to the explosion of new language models on the market.

Is This True AI?

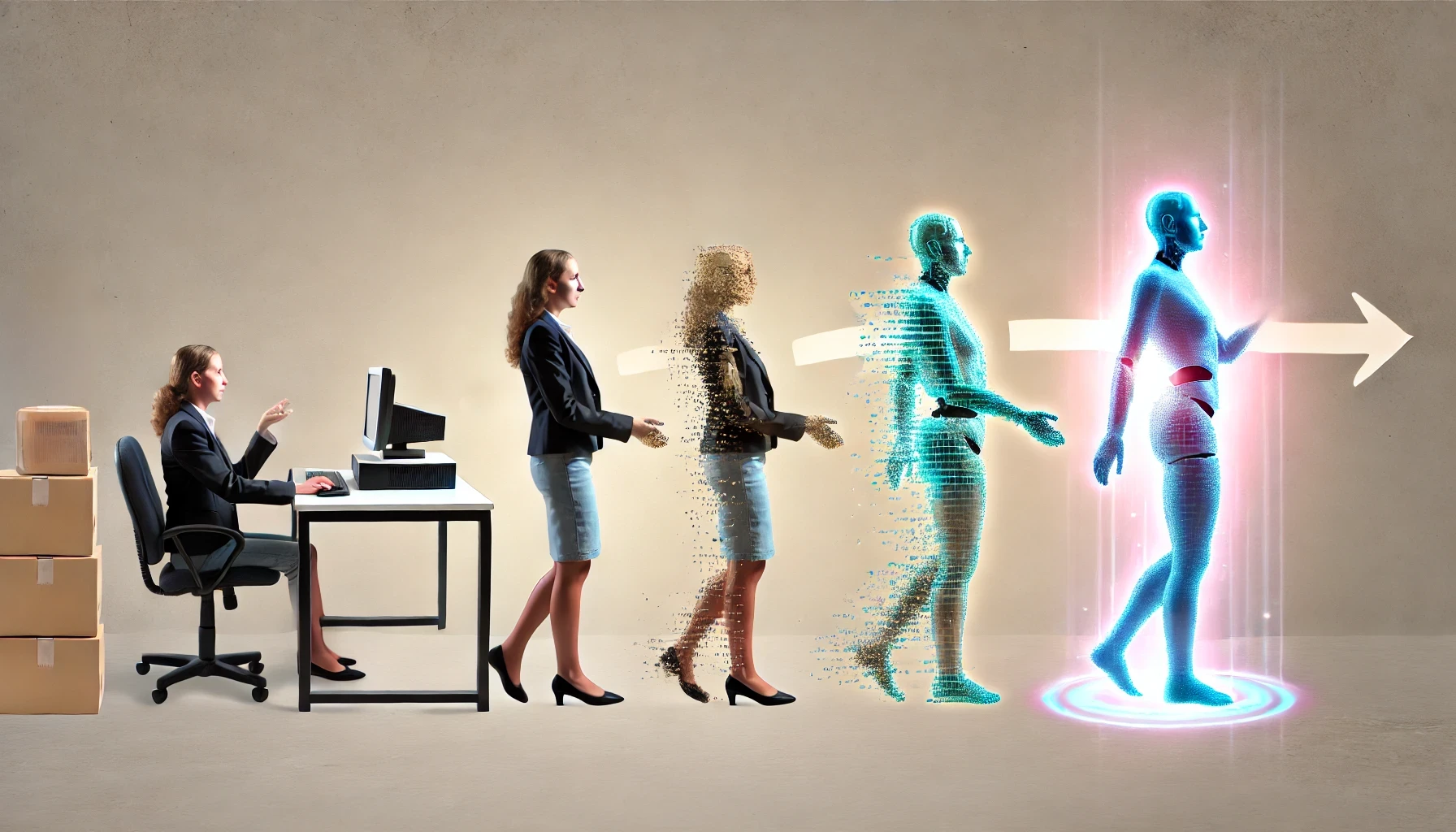

When we think about AI in sci-fi terms, we picture something that can understand, reason, and act just like a human—a kind of artificial mind that “gets” the world. Today’s AI, called “narrow AI,” isn’t quite there yet. It’s trained to perform specific tasks (like generating text, identifying images, or analysing data) but has no fundamental understanding or awareness. Models like ChatGPT sound human, but they’re essentially very sophisticated pattern matchers working off massive datasets, not actually “thinking” as we do.
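As a rough illustration of what “pattern matching” means here, the Python sketch below predicts the next word purely by counting which word most often followed it in some training text. This is a deliberately tiny stand-in, not how ChatGPT works internally: real language models use large neural networks rather than simple counts. The function names and the sample sentence are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)

print(predict_next(model, "the"))   # "cat" follows "the" most often
print(predict_next(model, "fish"))  # nothing ever followed "fish"
```

The program has no idea what a cat or a fish is; it only knows which words tended to appear next to each other. Scale that idea up enormously and you get output that sounds fluent without any guarantee of understanding behind it.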

The Future of AI: True AI and When Will It Happen?

“True AI,” or Artificial General Intelligence (AGI), would mean a system capable of understanding, learning, and reasoning at human levels. Estimates of when that might happen vary wildly: some experts think AGI could be reached within this decade, others say we’re 20 years away, and others still suggest it could take 100 years, depending on breakthroughs in both computing and our understanding of human cognition.

To get there, three key hurdles need to be cleared:

Understanding Consciousness: We still don’t fully understand how human consciousness or general intelligence works. Without that, replicating it in a machine is no easy task.

Ethical and Safety Challenges: Creating an AI with human-like reasoning raises complex ethical and control issues. It’s one thing to design a model that recommends movies and another to create one that could make moral decisions or set its own goals.

Power and Data Efficiency: Current AI systems are incredibly hungry for data and computing power. To develop an AGI that can adapt and learn without continuous retraining, we’ll need huge improvements in computing efficiency.

Final Thoughts

AI will continue to evolve and integrate itself into more areas of our lives, powering everything from search results to personalised streaming recommendations. This “narrow AI” will keep improving and may soon feel like an everyday essential, even if it still lacks true understanding. The leap to AGI, or “true AI,” is speculative but promises profound changes for society.

If AI felt like it appeared out of nowhere, just know it’s only going to keep accelerating from here.
